Should we worry about AI... or about humans?

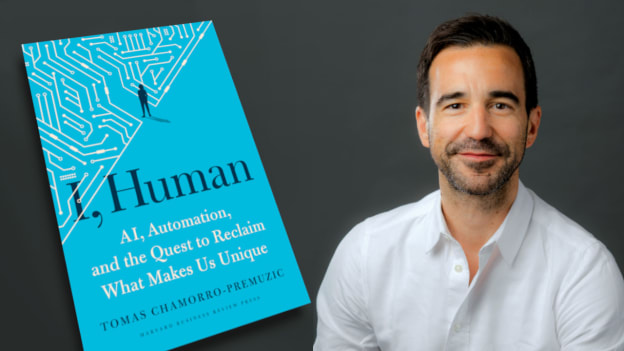

Artificial intelligence has fascinated us throughout the history of computing, and has even become the centre of popular fears that it will replace us. But it's not AI that we should be worried about; it's us, the humans who build it, use it, and work and play with it. That is the warning conveyed by 'I, Human', the new book by noted international social scientist Dr Tomas Chamorro-Premuzic, Chief Innovation Officer of ManpowerGroup.

Throughout 'I, Human', Dr Chamorro-Premuzic contends that the danger of AI lies not in the technology itself, but in humanity's inability to self-regulate how we respond to it – how easily we shape our behaviour around algorithms in ways that ultimately stunt our intellectual, emotional, and social development.

In conjunction with the release of 'I, Human' on 28 February, People Matters met with Dr Chamorro-Premuzic for a conversation in Singapore about the place he sees AI taking in today's world and what impelled him to raise a red flag.

Here are the highlights of the discussion, edited for brevity.

Reading your new book, I get a distinct sense of pessimism about what an AI-powered future will look like. You don't think that society at large is capable of the level of self-awareness and self-regulation required to live and work with AI?

Exactly. We often focus on the impact AI has in driving conspiracy theories and the role it plays in specific cases, whether it's Brexit or Trump's election, but we forget that everyday users are also being influenced by the ordinary algorithms that inhabit social media and other platforms.

Millions of people around the world depend on the content they get on these platforms every day, and the algorithms are optimised to make these people more exaggerated versions of themselves, because they suppress any information that is discrepant, or discordant, with what they already think. It's like living in a town or working in an office where people only ever tell you what you want to hear. How can that make you more open-minded?

AI is the perfect self-enhancement tool, because it makes us feel better about ourselves. You cannot entirely blame it, because it's built to exploit a human weakness, and it does so very successfully: the desire to feel right, as opposed to the desire to understand what is actually going on.

Are we giving too much credit to the present-day applications of AI?

The AI business model is predicated on the value we attach to prediction. Look at how Google Bard recently wiped out $100 billion of Alphabet's market value because of a single error, and how the market immediately assumed that one product must be better than the other.

But while these algorithms have been very impactful, shaping our lives and our choices, I think the market has so far overestimated how well they know us, because they can only predict us within a narrow realm. Spotify can predict my taste in music really, really well; everything Netflix recommends to me is an attractive choice. But even so, each platform can only do this within the very specific range it covers. You'd have to stitch together the models that all these different platforms have of us to get anything close to a full model of who we are as humans.

Is it really such a good thing, this ability to predict people's preferences on such a personal level?

You can optimise your life for efficiency, but that doesn't necessarily make it better. The reality is, if we outsource most of our choices to others to make our lives more efficient, we leave ourselves very few opportunities to make mistakes.

And I think inevitably, that translates into making us more robot-like because everything is optimised for our past preferences. It's designed to squeeze out unexpected choices.

And it gets worse with age. When you're young, you should be rebellious and nonconforming, dreaming of breaking rules and changing the world. As the vast majority of people grow older, they become more conservative. In fact, the word 'conservative' comes from preserving your beliefs and ideas: the more experience you have, the more immune you become to anything that challenges your knowledge. But if we're indoctrinating people into homogeneous thinking from a young age, and they're not rebellious and nonconforming when they're young, they will only become even more conservative later.

How is this different from what young people are already doing, though? They will tend towards homogeneity in their social groups anyway, whether due to social pressure or because it's enforced by educational institutions.

The difference is that they are spending most of their time producing data that feeds AI, and becoming more and more detached from the real world. That has never happened before. You could argue that, in the digital revolution or the AI age, people can still choose to go to this platform or that one, and within it they can still express their preferences for this trend or that option. But that does not change the fact that the overwhelming majority of people are spending more time in the online universe, on AI platforms, than in the real world.

So how do we break them away from it? Are schools going to have to start teaching children how to rebel against the Matrix?

They're going to have to help students understand how to cultivate the behaviours that make them human. The power of education has changed. Having knowledge and transmitting knowledge or information to students – that has now been commoditised. There is no need for a student to go to class to learn something. AI really is better than the vast majority of teachers. It's always there. It's free. You can ask it anything. Its answers might be correct or not – just like the teacher's.

It's even less biased, or more politically correct, than humans on anything that is remotely controversial. You can ask it about controversial figures like Trump, and it will say something like “Some people say he is a good influence, some people say he is a bad influence...”

The problem we have with technology is how to live, how to relate to others. What to do with our empathy, our curiosity, our creativity.

And I think it's inevitable that we're going to have to help young people in the coming generations understand how they can become better people with these technologies, and not worse.

Pretending the technologies don't exist or that they're going to go away is very naive. Treating them as an enemy is counterproductive. The question is, how can you leverage them? And you have to recognise that some people might succeed while others might get lost in their addictive, non-educational aspects. That's the beauty of human individuality: given the same tool, we're going to interact with it in different ways. And education has to help people make the most of these interactions.

What about deep knowledge, deep skills? Will AI ever be able to substitute for those?

It's a great tool for seeing what the vast majority of people think on average. If you want to know what the consensus is, AI is a very quick way to get that information. But an expert opinion is not just about what everybody thinks. A consensus is not a substitute for an educated specialist perspective. So what I worry about is that AI is a really good excuse to become lazy and say, “Oh, I don't need to study anything. If you ask me about something, I'll just go to ChatGPT.”

Two minutes is no substitute for spending three months or three years understanding something.

Where can AI actually improve people and organisations, then?

The area I'm most actively interested in is how we can use AI to de-bias organisations and increase meritocracy. The average hiring manager and the average recruiter are limited in their ability to understand human potential. And this is even harder when they have to take into account what jobs are going to exist in five years. How are they to help someone whose skills and education are not relevant today become an active contributor to the economy?

All of these things require data, and AI is the best tool for translating data into insights. That is even more true in the field of diversity and inclusion, which, by the way, is an area with many limitations. Let's say an organisation is very committed to increasing diversity and inclusion, but people are still relying on gut feel or intuition. It's great that hiring managers and recruiters are getting unconscious bias training, but no matter how many hours of training you go through, your brain can never un-know what somebody looks like: man or woman, young or old, rich or poor, attractive or not.

But an algorithm can be trained to only focus on the things that matter and ignore all the things that are irrelevant. So I'm rationally optimistic about the opportunity and the potential in that.

Although I'm less optimistic about humans being open-minded enough to do it. Some people call me cynical, and I'll tell you why. If you had an X-ray that could go into an organisation and show what really goes on and the value of the work being done, it would reveal the gap between how successful people are and what they actually contribute. It could help people who have been unfairly overlooked. But the people who are not contributing are, of course, afraid of being called out. So I think a lot of the opposition to AI is coming from that group.

On opposition to AI – what do you think of the concerns around AI ethics, or the fears that AI will destroy jobs?

Technology automates tasks, and AI is no exception. But that creates many other jobs. When the work of copywriters, researchers or generalists is automated, new roles such as ethical AI specialists emerge. The question is, how do we reskill and upskill people so that they can access and benefit from all the jobs that are created? Some jobs will always be destroyed, and in many places inequality increases because fewer people in the population have the skills to access the new jobs.

This is the real risk. This is the main social challenge of our time.