Learning enablers in a hyper-connected world

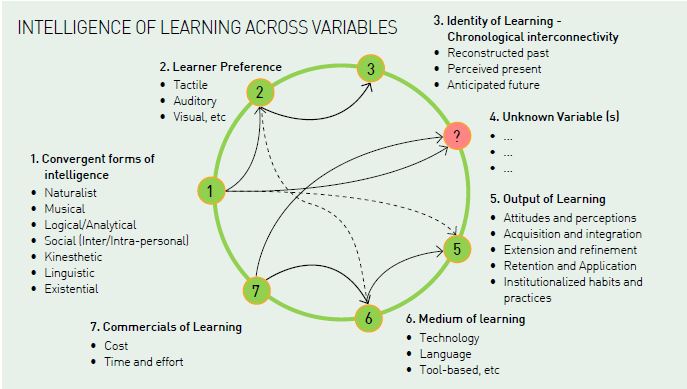

Learning as a sub-set of intelligence (the other elements of intelligence being capacity for logic, self-awareness, planning, creativity, problem solving, etc.) has been re-defined in the world of futurism, context and change. The intelligence of learning therefore spans several variables, and we do not have enough epistemological validation to determine how many variables there are to learning. But that is the simpler of the issues.

While the world is obsessed with the 4th Industrial Revolution (4IR) and its convergence of Artificial Intelligence, machine learning and IoT, a real form of intelligence is slipping past in all of this: the ability of the learner-system interface to manage the complexity arising from multiple variables, irrational patterns and non-linear problems.

The larger challenge it poses, however, is the assumption that machines will help us do that.

Leave intelligent machines aside for a while. The Flynn effect, the measured rise in human IQ over time, has been questioned by James Flynn himself, on the ground that a very large increase in IQ does not measure intelligence but only a minor sort of abstract problem-solving ability with little practical significance. Additionally, simple reaction-time measures, which correlate substantially with measures of general intelligence (g) and are considered elementary measures of cognition, have been found to have declined by around 1.16 points per decade, according to a meta-analysis by Woodley, te Nijenhuis and Murphy of 14 age-matched studies from Western countries between 1889 and 2004.

As a human resource professional, my concern is less about how many jobs will be erased by automation, and more about tracing general intelligence (g) in the employee life-cycle processes of personnel selection and talent management. Its utility in work settings is pervasive because it is essentially the ability to deal with cognitive complexity. I do not see a near future in which artificial intelligence and machine learning will help with complex union negotiations, with workplace engagement through metadata input on previous success, or with changes in motivational processes that lead to constructive leadership behavior. Alan Turing’s statement to the London Mathematical Society in February 1947 on Artificial Intelligence (“… It would be like a pupil who had learnt much from his master, but had added much more by his own work…”) has a large element of assumption. Developing an equivalent of the caudate nucleus or the basal ganglia is not easy, because human brains learn through the variables cited above, including genetic construction.

Since human intelligence created machines to do jobs, jobs being taken by machines appears to be a ‘circular reference’ error. As more jobs get automated, more jobs can be created to keep them automated. Organizations that at some point implemented HR technologies to rationalize FTEs have also, over the years, created employment through ‘diversity’, ‘HR data analytics’ and ‘Employee Wellness’ practice areas and applications at the workplace. The fact is, the ‘linear and standard operating procedures’ that were thought to be automatable may actually be non-linear and non-standard. Two close-to-home examples:

1) Payroll automation has freed up the transactional elements of the job (read: linear and standard, in this case), creating opportunities for a deeper focus on benefits program design, which has resulted in custom payroll solutions that are not standard.

2) Data shows that automated query-resolution machines (tier 1 in customer query systems) that have had years of ‘possible responses’ programmed into them lead, in more than 73 percent of cases, to a tier-2 escalation requiring a ‘manned’ response. Though an inherent assumption in this case is that at least 27 percent of the jobs can be done away with, tier-2 escalations result in higher customer dropouts because of the tier-1 experience of automated query resolution (or the lack of it). Users complain of the frustration of going through a programmed, half-intelligent system that cannot ‘converse’ and has none of the ‘aesthetics’ required to interact (intelligence, per my earlier reference to Gardner, has ‘Social’ (inter- and intrapersonal) intelligence as a subset).

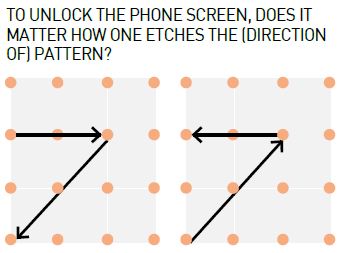

In a small conference-room experiment related to pattern recognition (which is a significant element of AI and, in general, of problem identification), I asked multiple participants what they expected when unlocking a phone screen through a pattern pre-set by the phone’s user. To unlock the screen, should the direction in which one etches the pattern matter?

The assumption that “…certain initial instruction tables, so constructed that these tables might on occasion, if good reason arose, modify those tables…” is a very large one. It also risks assuming that aesthetics matter less than structure (in paintings), that emotions matter less than process (in social interactions), that distortions matter less than notes (in rock music), that volume matters more than essence (in literature) and, artificial intelligence willing, that the people line matters less than the top or bottom lines (in business).

There seems to be a paradox, an inherent contradiction, in the thought that we need Artificial Intelligence and machine learning to aid our process of learning, when the rate at which that intelligence builds up, whether through the auto-evolution of information inside the machine or through ongoing external inputs, is slower than the rate of change around us, and is bound to be so.

There is every possibility, however, of magical and deliberate outcomes that result in increased productivity and efficiency, faster learning, wider exposure, etc. We need to know the solution, however, to the following school-level problem:

There is a train that is moving towards a station. At any point in time, its speed is the same as its distance from the station. When will it reach the station?

For machine-enabled learning to combine the optimal number of variables and achieve “transfer” (application of skill, knowledge or understanding to resolve a novel problem or situation) will take the same time as the answer to the above problem.
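For readers who want the train problem worked through, here is a minimal sketch. It assumes (this is not stated in the problem) that speed and distance are expressed in consistent units, that the rule holds exactly at every instant, and that the train starts at some positive distance $x_0$ from the station. The symbols $x(t)$, $x_0$ and $\varepsilon$ are introduced here only for illustration.

```latex
\begin{align*}
\frac{dx}{dt} &= -x(t), \qquad x(0) = x_0 > 0
  && \text{(speed equals remaining distance)} \\
x(t) &= x_0\, e^{-t}
  && \text{(solution of the equation above)} \\
t_\varepsilon &= \ln\frac{x_0}{\varepsilon}
  && \text{(time to come within distance } \varepsilon \text{ of the station)} \\
\lim_{\varepsilon \to 0^{+}} t_\varepsilon &= \infty
\end{align*}
```

Under these assumptions the train gets arbitrarily close to the station but does not arrive in any finite time, which appears to be the comparison being drawn here.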

The rush of conversations about learning in the 4IR environment risks mistaking novelty for innovation, and intelligence for something artificial.

There will always be a lag between artificial intelligence and human intelligence, the latter going first.

Learning and Development will therefore strategically focus more on the following, in organizational and employee life-cycle management:

1) Reduce information-overload from a hyper-connected world of IoT, to provide targeted inputs on situational learning (and applications) through a convergence of multi-domain intelligence for possible and reasonable solutions to a problem.

2) Non-linear and aperiodic sequence studies applied to talent turnover and predictability of successful engagement.

3) Pattern recognition techniques over longitudinal periods for sustainable decision-making.

4) Irrational decision processes and image-generators to synchronize heterogeneous team dynamics.

5) Exceptionally simple technology applications that change behavior.

6) Custom-learning content that is congruent with actual abilities, skills and knowledge, and that pivots on reflectiveness, prior and emerging experience, creativity, originality and imagination.

Technology is a great learning aid, but it does not change the fundamentals of learning. Reading on a digital medium shows a 20-30 percent performance deficit compared with reading a paper book (Kak, Muter, Wright and Lickorish, et al.), gives lower accuracy (defined in different ways by Creed, Wilkinson, Robinshaw) on cognitively demanding tasks, and causes greater fatigue and ocular discomfort. Technology-enabled learning possibly does not impact comprehension (Gould, Egan, Muter and Maurutto, et al.), but my own experience shows lower retention (that may be because we grew up reading from non-digitized interfaces).

If one wishes to go to the extreme of assuming that Artificial Intelligence and Machine Learning are the way to go, one would be liable to find that it is gained at the price of an intolerable loss of intelligence itself. For a true learner, therefore, learning happens at a museum, with a machine interface, over a run and a work-out, in story-telling with children, in 360-degree social and official interactions, in playing or listening to music, in setting up a camp, in controlling self-behavior, etc. There can hardly be anything artificial in this.